Why Most AI Tests Don’t Deliver Reliable Results

Last updated on November 3, 2025 at 11:39 AM.

Recently, I stumbled upon yet another self-proclaimed AI expert on LinkedIn who proudly explained why she doesn’t use Perplexity for research. The post claimed to “compare” ChatGPT and Perplexity. It opened with a clickbait headline, hooked me for a second, and then turned into a masterclass in methodological failure. If you’re comparing AI tools without understanding empirical research, you’re not an expert; you’re part of the noise.

Why AI Tool Comparisons Are Usually Pointless

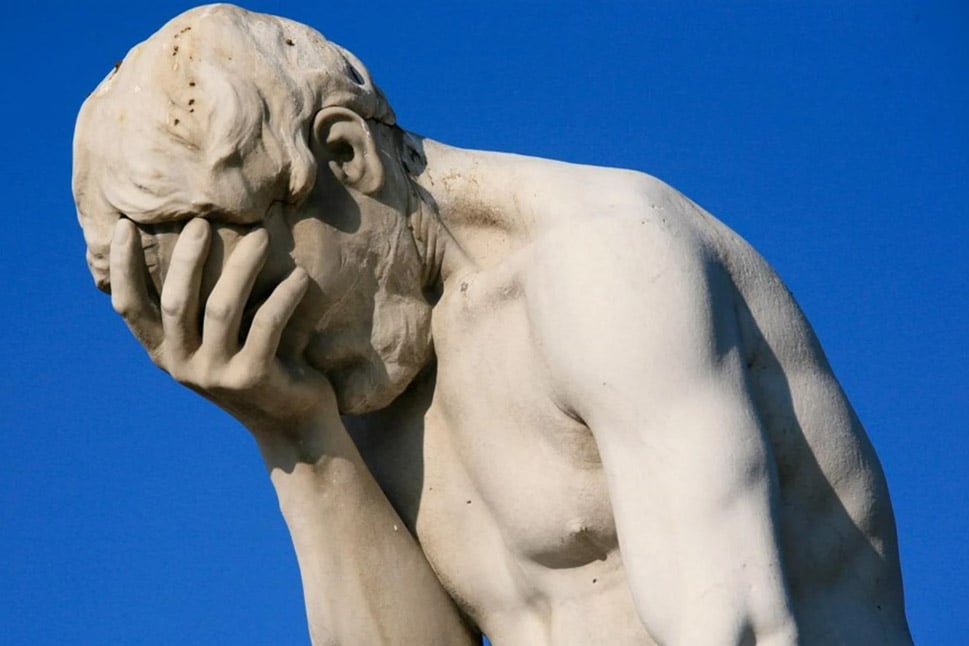

You know the pattern: screenshots, percentages, charts, and a confident conclusion, none of which would survive the first week of an empirical methods seminar. I studied social sciences from 1997 to 2003, and I learned the hard way what data quality, validity, and bias mean. Reading that post, I couldn’t help but think: if I were in a position of authority in AI, I’d be very careful with statements like this. Without proper research design, you don’t look insightful; you look incompetent.

Digital Astrology Instead of Research

Let’s be honest: pitting ChatGPT, Perplexity, or any other model version against each other in a one-off “experiment” is not research. It’s digital astrology. These tools evolve weekly, outputs change daily, and results depend on prompts, context windows, and model tiers. A screenshot from April is irrelevant in October. So what’s the value of that kind of comparison? None. It’s pseudo-science. The right question is not “Which AI is better?” but “How can professionals use these tools to improve research outcomes?” That’s what we should be talking about.

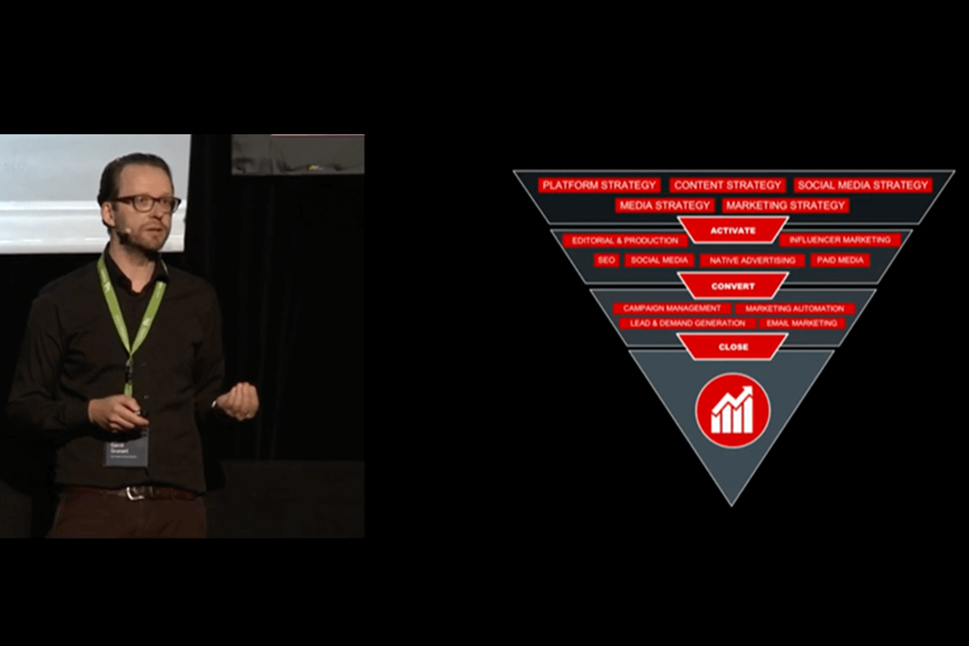

What a Real Comparison Should Look Like

If we really want to compare research performance, let’s do it properly. Let’s compare traditional research work, the kind done by human analysts, working students, and consultants, with AI-assisted research using tools like Perplexity. And let’s measure what actually matters: time, cost, and quality of insight. Unless you’re running statistically valid tests with control groups and blind evaluations, your “results” are anecdotes, not evidence.

The Price of Free Tools (and False Confidence)

Here’s my theory: the author of that viral post used the free version of Perplexity. And yes, that explains a lot. The free version doesn’t access academic databases, doesn’t include up-to-date sources, and often truncates results. But if you call yourself a professional, especially an “AI consultant,” and you rely on free tools for your client work, you’re not saving money. You’re signaling mediocrity. Perplexity Pro costs $20 a month. That’s the equivalent of one mediocre lunch meeting or two rounds of overpriced coffee.

Why €200 a Month Is a Bargain for Intelligence

Now imagine going beyond the basics. For around €200 a month, you can combine premium versions of ChatGPT, Perplexity, Claude, and a few research extensions. That’s not a luxury; that’s a baseline investment in professional capability. Compare this to the cheapest form of human research in your company: an intern. Let’s say you pay them €20 an hour for 20 hours a week. That’s roughly €1,600 a month, plus additional costs. For that same money, you could run professional-grade AI research infrastructure for about eight months. Eight months of speed, consistency, and documented sources versus one month of manual effort. The math speaks for itself.
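
A rough worked version of that comparison, assuming four working weeks per month and leaving out the intern’s additional employment costs (both are simplifications for illustration, not figures from a real payroll):

$$
\begin{aligned}
\text{Intern per month} &= 20\ \tfrac{\text{€}}{\text{h}} \times 20\ \tfrac{\text{h}}{\text{week}} \times 4\ \text{weeks} = \text{€}1{,}600 \\
\text{Months of AI tooling} &= \frac{\text{€}1{,}600}{\text{€}200\ \text{per month}} = 8
\end{aligned}
$$

With the more precise average of about 4.33 weeks per month, the intern figure rises to roughly €1,733, and any additional employment costs push it higher still, so eight months is a conservative estimate.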

The Intern vs. the AI Assistant

But let’s play along with the traditional model. Send your intern to the library. They’ll spend half a day finding books, waiting for a copy machine, and reading outdated material. Or have them build a “source pool” from the Big Four consultancies’ latest white papers. That sounds efficient until you realize they’ll spend hours evaluating what’s reliable and what’s fluff. Bravo. But here’s the question: if your intern has the intellectual capacity to critically evaluate sources, why not give them a professional AI tool that filters, summarizes, and contextualizes information in seconds? AI doesn’t replace thinking; it accelerates it. It’s the intellectual equivalent of giving your intern a high-speed internet connection instead of a fax machine.

Who Should Validate the Results?

Here’s where it gets serious. If your company charges €140 per consulting hour, who’s responsible for validation? Hopefully not the intern. It should be a senior strategist, at least for that price. As a managing director, I’d never allow unverified information to leave my company. And as a client, I’d never pay for it.

That’s why the only sustainable research principle, whether you’re in AI, medicine, or consulting, is this: Human first. Human last. The human defines the question, interprets the data, and verifies the conclusions. AI tools exist to make that process faster, broader, and more precise, not to replace expertise.

The Real Lesson for AI Consultants

And that brings us back to our “AI expert.” Anyone who publicly declares that “Perplexity isn’t reliable” without specifying which version, which dataset, or which prompt was tested is demonstrating a lack of understanding, not insight. Professional consultants know that tools are neutral; it’s the human process that determines output quality. When you publish unscientific comparisons, you don’t just reveal your ignorance. You damage trust in the very field you claim to represent, and you disqualify yourself as a serious consultant.

Invest in Intelligence, Not Ego

AI research isn’t free, but ignorance is expensive. Don’t waste your money on consultants who don’t understand how to design or interpret research. If someone can’t invest €20 in a professional tool, how can they justify charging €200 per hour for their “expertise”? The difference between a credible consultant and a self-proclaimed AI ambassador is simple: one validates sources, the other validates their own opinions.

What AI tools give us is not automation but augmentation. They amplify human intelligence rather than replace it. When used properly, they allow professionals to focus on what matters: defining the right questions, identifying patterns, and making strategic decisions. In a world flooded with information, the ability to synthesize insights faster and more reliably is a competitive advantage. That’s not a threat to human work; it’s an upgrade to human thinking.

Final Takeaway: Human First. Human Last. Always.

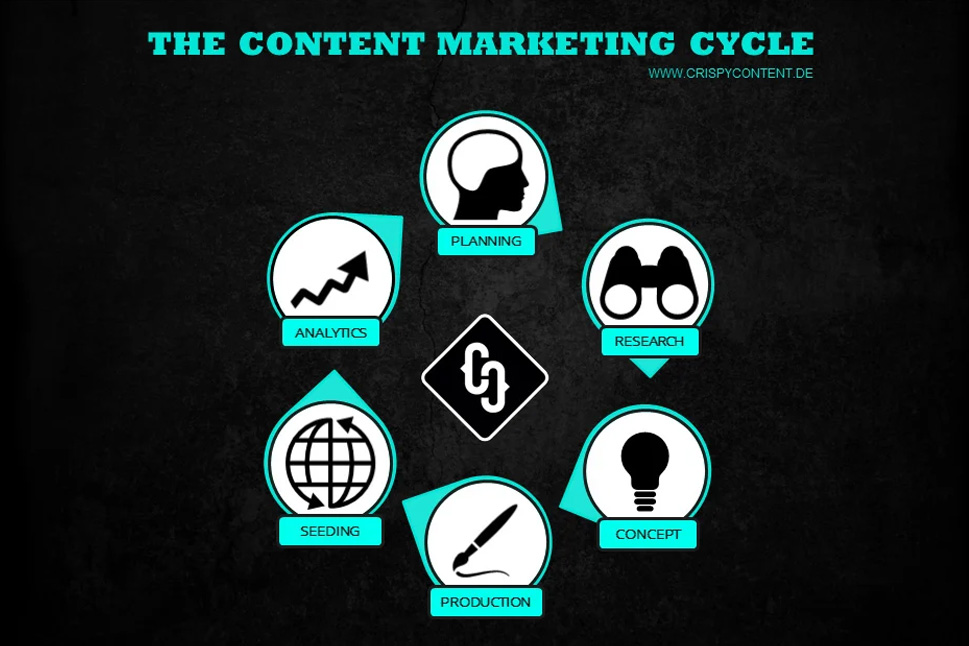

At Crispy Content®, we believe in this principle above all. We don’t outsource intelligence to machines; we use AI to empower human creativity, rigor, and decision-making. We help companies build AI-enabled processes that save time, improve data quality, and enhance the credibility of their insights. The goal isn’t to replace people; it’s to make them more effective. Want to bring real intelligence into your content operations? Let’s talk.

Gerrit Grunert

Gerrit Grunert is the founder and CEO of Crispy Content®. In 2019, he published his book "Methodical Content Marketing" with Springer Gabler, as well as the online course series "Making Content." In his free time, Gerrit is a passionate guitar collector who enjoys reading books by Stefan Zweig and listening to music from the day before yesterday.