Google Update: Removal of &num=100 and Its Impact on SEO Tools

Last updated on October 8, 2025 at 1:27 PM.

For years, a seemingly insignificant parameter allowed many SEO tools to elegantly bypass one of Google Search’s strictest rules: the limitation to ten results per page. The small suffix "&num=100" in the search URL made it possible to retrieve 100 results at once, an enormous efficiency boost for anyone needing large volumes of SERP data for analysis, monitoring, or AI training. But September 2025 marked a turning point: Google quietly closed this loophole, without warning and without replacement. The industry, long reliant on this convenient workaround, suddenly faced a radical disruption.

On Clever Workarounds and Their Consequences

The rule was clear: Google displays ten results per page. But insiders knew how to extract significantly more data with a simple URL tweak. It was an unspoken agreement, an undocumented trick, a perfect example of the subtle, unwritten rules that shape daily work with search engines. The SEO world thrived on this workaround, building entire business models, monitoring processes, and data pipelines around it. The consequences of an abrupt end, from massive cost increases to data loss, were long underestimated. Until now.

The Importance of Comprehensive SERP Data for Competitiveness

Many companies and service providers relied on this broad data extraction to deeply analyze their own visibility or that of their competitors. Visibility indices, market observations, and AI training data were all based on capturing as many rankings as possible per keyword. The objective was to understand one’s Google ranking not just at the surface level, but also in the deeper positions, which are crucial for long-tail traffic, niche markets, and competitive analysis.

Precision and Transparency in Reporting Are at Stake

With the end of the ability to query 100 results at once, the landscape is shifting. Tools must now either send ten times as many requests to obtain the same data or reduce the depth of their reports. The result: reports that previously included the top 100 rankings may now only cover the top 20 or 50. Strategic decisions lose precision. This raises the question: How can transparency and data quality be maintained when the most important data sources suddenly provide less depth?
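The "ten times as many requests" figure follows directly from the page size. The short Python sketch below only illustrates that arithmetic; the function names are made up for this example and do not come from any SEO tool.

```python
import math


def requests_needed(depth: int, results_per_page: int) -> int:
    """Number of page requests required to cover the top `depth` results."""
    return math.ceil(depth / results_per_page)


def page_offsets(depth: int, results_per_page: int = 10) -> list[int]:
    """Zero-based offset of the first result on each page that must be fetched."""
    return list(range(0, depth, results_per_page))


# Covering the top 100 with 100 results per page versus 10 results per page.
with_num_100 = requests_needed(100, 100)
without_num_100 = requests_needed(100, 10)
print(with_num_100, without_num_100)

# Reduced report depths need proportionally fewer pages.
print(requests_needed(20, 10), requests_needed(50, 10))
print(page_offsets(50))
```

Capping a report at the top 20 or top 50 cuts the request count to two or five pages per keyword, which is why reduced depth is the obvious cost lever.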

Technical Challenges and Economic Impacts

The technical impact is significant. Monitoring and analytics providers such as Ahrefs, Semrush, and Sistrix have had to overhaul their entire infrastructure. Ten times as many requests mean higher server loads, increased costs, and slower updates. Some providers have reported temporary outages and inaccurate reports during the transition phase. Additionally, those wishing to maintain access to deeper rankings must pay, either by allocating more internal resources or by purchasing alternative data sources.
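To get a feel for the load involved, here is a rough Python sketch of daily request volume for a keyword set. The keyword count and the request budget are invented numbers chosen for illustration, not figures from any provider.

```python
import math


def daily_requests(keywords: int, depth: int, results_per_page: int) -> int:
    """Requests per day if every keyword is checked once to the given depth."""
    return keywords * math.ceil(depth / results_per_page)


def max_depth_within_budget(keywords: int, request_budget: int,
                            results_per_page: int = 10) -> int:
    """Deepest ranking position that fits into a fixed daily request budget."""
    pages_per_keyword = request_budget // keywords
    return pages_per_keyword * results_per_page


KEYWORDS = 50_000  # hypothetical size of a tracked keyword set

old_load = daily_requests(KEYWORDS, depth=100, results_per_page=100)
new_load = daily_requests(KEYWORDS, depth=100, results_per_page=10)
print(old_load, new_load)

# With a hypothetical budget of 100,000 requests per day, only two pages
# per keyword remain affordable.
print(max_depth_within_budget(KEYWORDS, request_budget=100_000))
```

Under these assumed numbers the same top-100 coverage goes from 50,000 to 500,000 requests per day, and a budget of twice the old volume only reaches the top 20.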

A Look at the Tool Landscape: Who Is Responding and How?

Some providers are responding flexibly, seeking new workarounds; others are reducing the scope of their analyses. A prominent case: as reported by the US-based GTMA Agency, several major SEO tools temporarily reduced their reporting depth to offset the increased load. Behind the scenes, developers are searching for efficient scraping strategies, alternative APIs, or partnerships that could restore access to deeper data. The SERP data market is in flux, and those who react too slowly risk performance losses and damage to their reputation.

Impact on AI Ecosystems and Data Aggregation

The Google update also affects the development of large language models (LLMs) and generative AI applications. The ability to quickly and affordably extract large volumes of search data was a cornerstone for many AI projects. With the new limitation, data acquisition costs are rising, and training and update cycles are slowing down. Those who want to continue accessing current, broad search data must find new approaches or make do with smaller datasets.

Why the Change Doesn't Affect Everyone Equally

Those who have relied on automated bulk queries now face the challenge of rethinking their processes. Small businesses using affordable standard tools may only notice changes later, when their reports suddenly become less comprehensive or rankings appear to "improve" simply because lower positions are no longer tracked. Data-driven marketing and analytics departments, on the other hand, feel the difference immediately: they lose insight into the lower ranks, where relevant niche topics and new competitors often emerge.

A Behind-the-Scenes Look: The GTMA Agency Case

The GTMA Agency provides a prime example of how disruptive these changes can be for data-driven marketers. Immediately after the deactivation of the "&num=100" query, they recorded a drastic drop in impressions in Google Search Console: the number of reported rankings plummeted because their bot could no longer capture lower positions as before. Simultaneously, average ranking positions seemed to improve. This was a false impression, since a significant portion of the data was now simply missing. The agency had to quickly adapt its reporting infrastructure, overhaul client communications, and test alternative data collection methods. After several weeks and additional investment, they managed to restore their reports to an acceptable level.
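Why the average position "improves" when deep rankings drop out can be shown with a few made-up numbers. The sketch below is a simplified illustration using an unweighted average over invented positions; it is not how Google Search Console or the agency's tooling computes the metric.

```python
def average_position(positions, max_depth=None):
    """Unweighted mean of the positions that are still tracked.

    Positions deeper than `max_depth` are dropped, mimicking a report
    that no longer sees the lower ranks. Returns None if nothing is left.
    """
    tracked = [p for p in positions if max_depth is None or p <= max_depth]
    if not tracked:
        return None
    return sum(tracked) / len(tracked)


# Invented ranking positions for six keywords of one site.
positions = [2, 8, 14, 40, 66, 92]

full_view = average_position(positions)          # all six rankings counted
truncated = average_position(positions, 20)      # only the top 20 visible
print(full_view, truncated)
```

Nothing about the site's actual rankings changed between the two calls. The second average looks far better only because the three deepest positions are no longer part of the data.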

New Methods and Innovative Solutions

The industry stands at a crossroads. Instead of relying on classic scraping techniques, alternative approaches are coming into focus: partnerships with data providers, AI-powered estimation methods, combining multiple sources, or building proprietary data pools. In the AI development sphere, new approaches are emerging to work with fewer, but higher-quality, data points. The key is to keep strategies flexible and invest in technological innovation early.

Recommendations for Businesses

To remain competitive in Google rankings and make informed decisions, companies should consider the following actions:

Review Processes and Tools

- Regularly evaluate existing monitoring and reporting processes

- Choose vendors that adapt to the new data landscape and communicate transparently

- Identify and, if necessary, expand proprietary data sources and interfaces

Analyze Technical and Economic Impacts

- Assess the cost-benefit ratio of current tools

- Explore alternative data sources and partnerships

- Include investments in AI and data science in the budgeting process

Establish New Approaches to Data Management

- Develop creative methods for data aggregation

- Strengthen internal expertise in data analysis and AI

- Monitor trends in Google updates and search data policy, and respond proactively

Why Expertise and Creativity Matter Now

At a time when established rules are being rewritten, adaptability and industry knowledge are more critical than ever. The changes brought by the Google update highlight one thing: relying on existing processes risks blind spots and competitive disadvantages. Companies that combine creativity with analytical expertise will discover new paths and secure a sustainable advantage, even as Google changes the rules again.

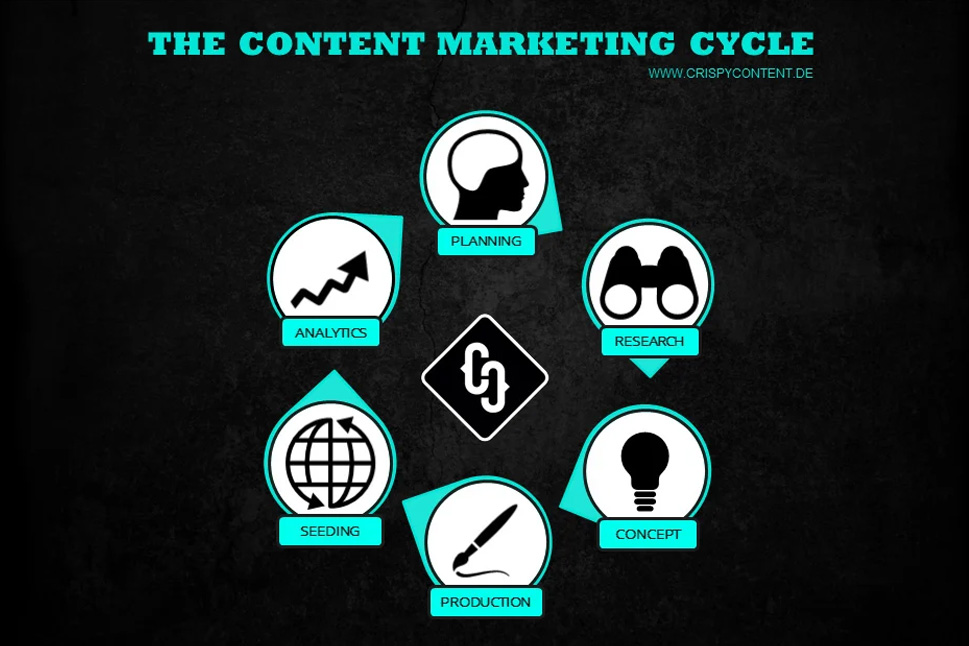

Creative, smart and talkative. Analytical, tech-savvy and hands-on. These are the ingredients for a content marketer at Crispy Content® - whether he or she is a content strategist, content creator, SEO expert, performance marketer or topic expert. Our content marketers are "T-Shaped Marketers". They have a broad range of knowledge paired with in-depth knowledge and skills in a single area.
